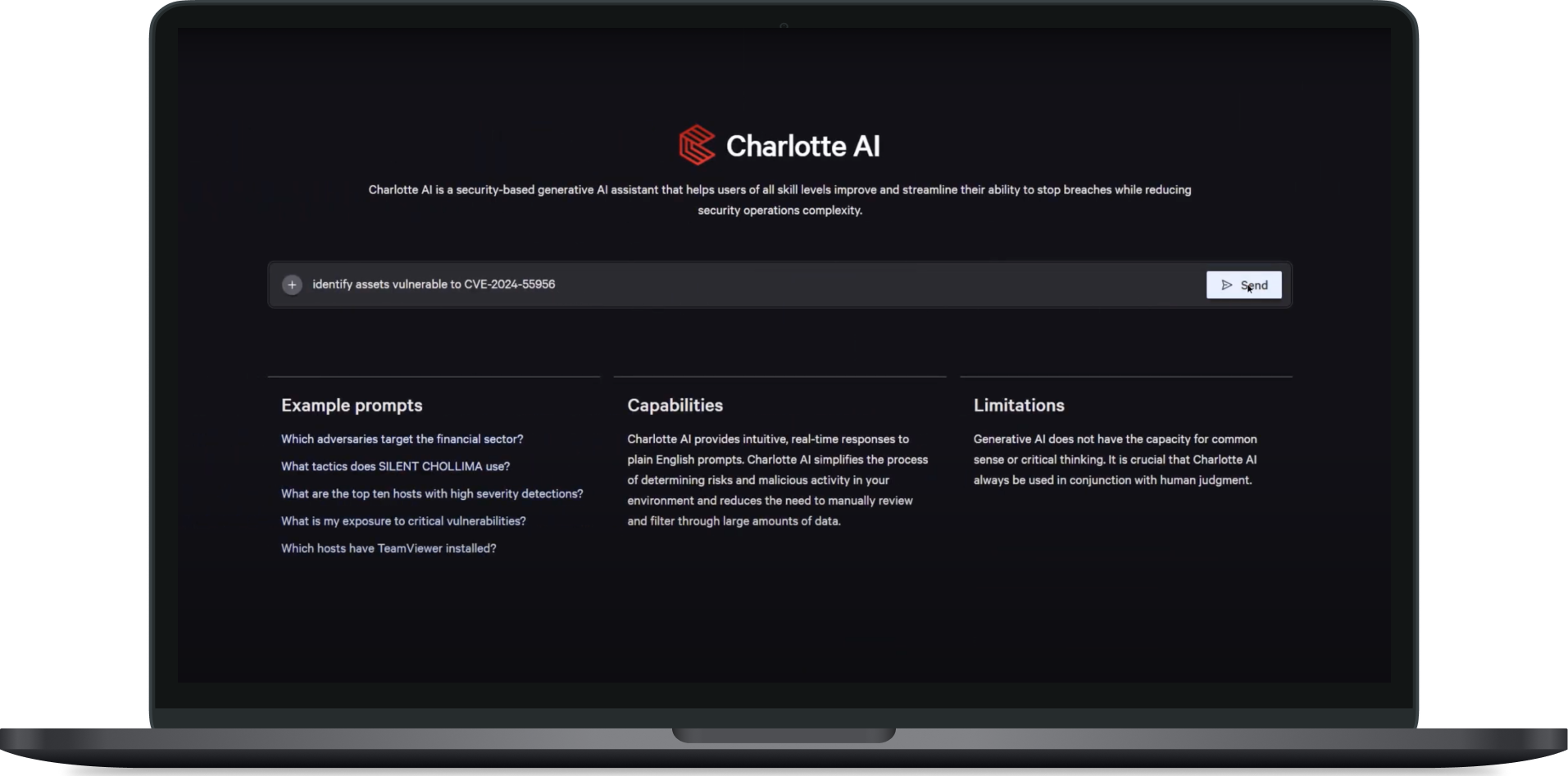

Charlotte AI

a generative AI security analyst

My role

Product design

User research

Prototyping

Design systems

Tools

Figma

Miro

Timeline

1.5 years

Note:

Due to the sensitive nature of this project, specific details and visuals have been omitted. Please contact me to discuss the project and my role in more depth.

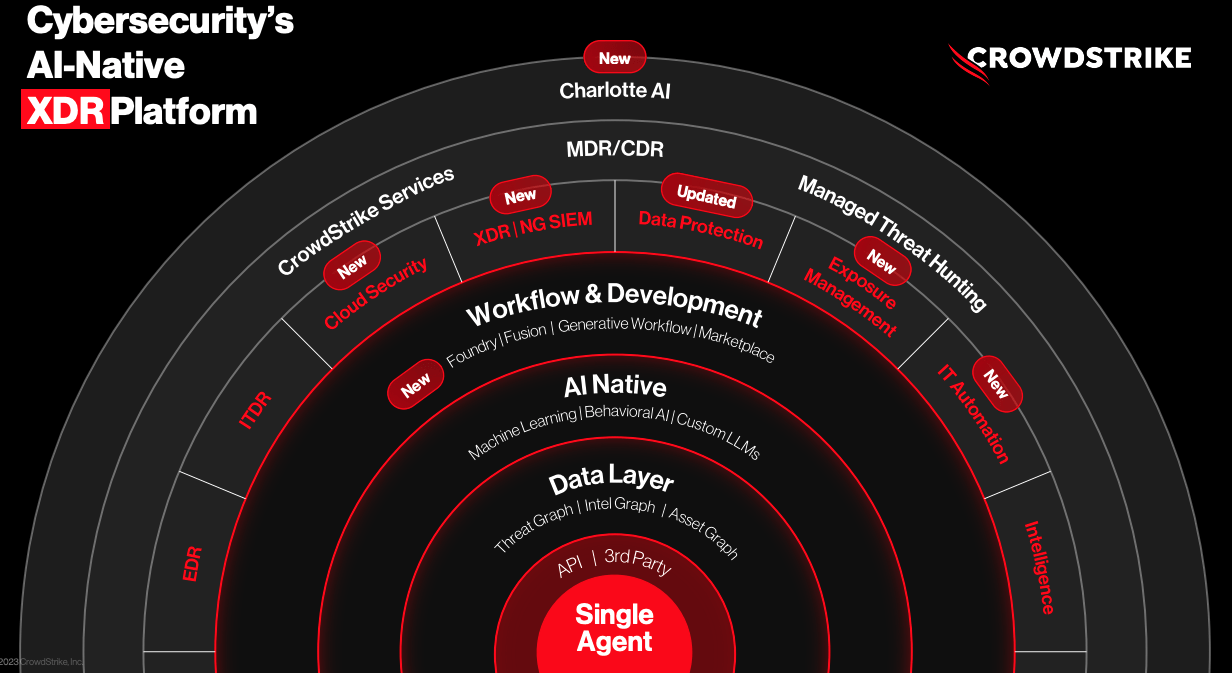

Designed, tested, and launched a generative AI chatbot that leverages the world’s highest-fidelity security data

Collaborated with product, engineering, data science, users, and internal analysts to build a product for cybersecurity professionals of all levels, from tier 1 analysts to CSOs

Adhered to tight timelines and deliverables, as well as strict requirements

Project summary

Process

Research

Conducted co-moderated feedback sessions followed by thematic analysis

Created, sent, and analyzed a chatbot expectations and concerns survey

Conducted user interviews and moderated usability sessions

Studied and analyzed competitors and other AI chat tools at length

Principles and Decisions

Helped create and adhere to Charlotte AI-specific principles to ensure a user-focused, ethical, and honest product

Designed features that clarify how the AI understood, processed, and delivered answers, creating transparency and trust in the product

Balanced clarity with information density throughout the project

Design

Worked with platform and foundations design teams to audit and identify easily usable components and patterns

Performed rapid iteration cycles with developers and designers to produce required results under tight deadlines

Collaborated on-site with front-end, back-end, and data science teams

Beta testing

Once a beta version of the product was ready, we enabled access for a select group of eager customers

We held multiple feedback sessions with each user, diving into their thoughts, feelings, and experiences

Identified multiple pain points and areas where design and engineering needed to focus to improve clarity, function, and overall experience and to align with user expectations

Users wanted to pivot to other parts of Falcon

Expectations of AI varied greatly across users and organizations

Deep distrust of AI and LLMs

Final result

After collecting feedback from our beta users, we continued to iterate and brainstorm new designs

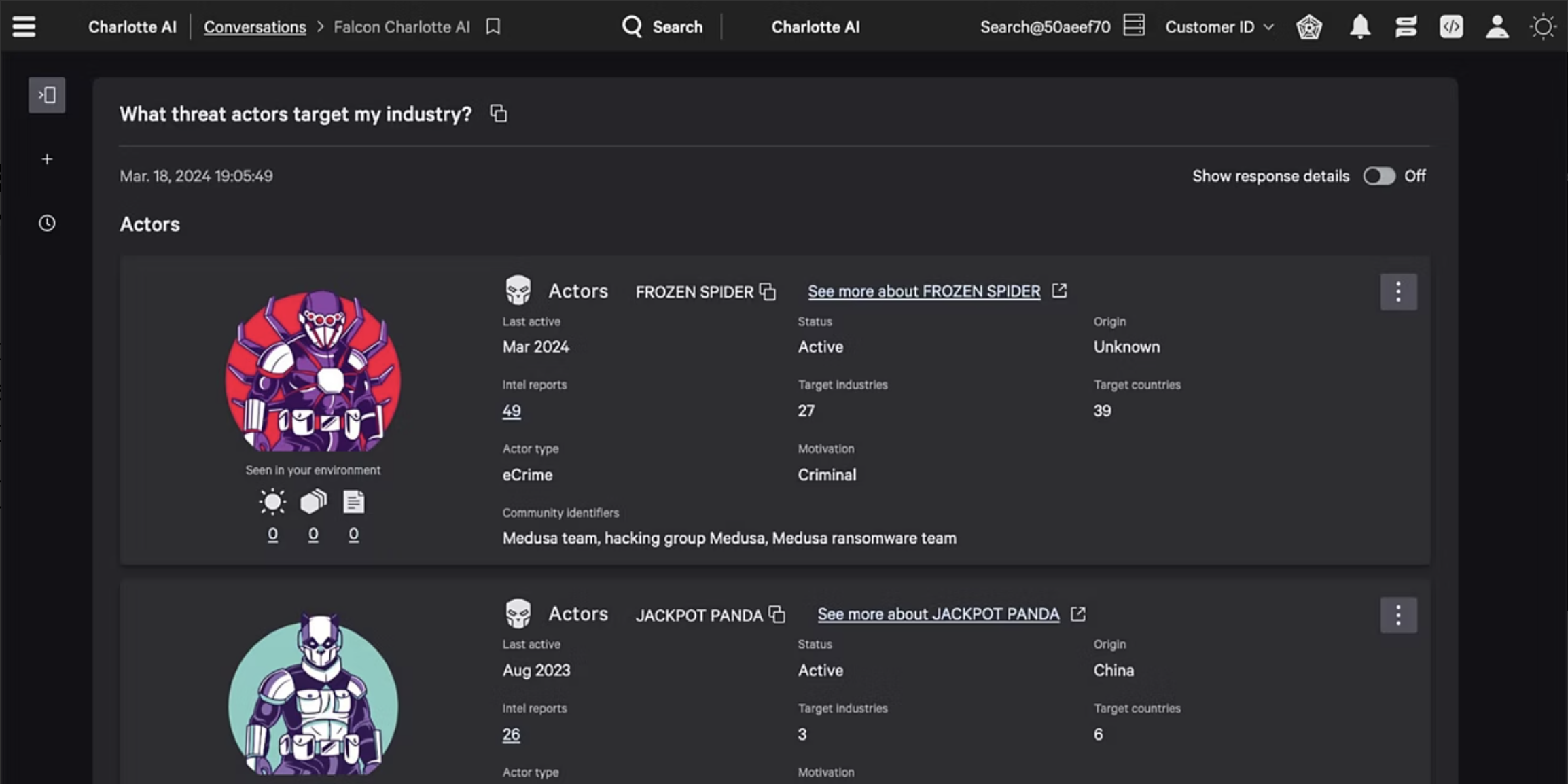

By designing features that allowed users to view the AI’s process, chain of command, and API calls, we avoided any ‘black box’ perceptions

We continued to enhance the back-end architecture, improving query and response sequencing to provide better results

As response quality improved and became more robust, we were able to add more relevant information to the UI to bring clarity to the chat experience

Surfacing filters applied on search

Giving users an LLM-generated snapshot of what was done on the back end

Improved messaging around capabilities and permissions

Impact

Delivered answers to questions about customer environments 75% faster

Wrote RTR queries 57% faster, empowering analysts of all levels

Adopted by multiple Fortune 500 clients, one of which commented that their “team couldn’t go a day without using Charlotte”